Container Formats

We often see videos with different file extensions: avi, rmvb, mp4, flv, mkv, and so on. These extensions usually indicate the container format. A container format is a specification for packaging video and audio data into a single file. Most containers can hold streams encoded with several different standards, so the file extension alone says little about which audio and video codecs are actually used.

To find out which codecs a file really uses, check its file properties or open it in a tool such as MediaInfo.

The main purpose of a container format is to store the video and audio bitstreams in one file according to a defined structure, so that they can be read back and played together.
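Because the extension is only a hint, tools identify the container from the file's leading bytes ("magic numbers"). The sketch below, with a hypothetical class name, checks a few well-known signatures. It identifies only the container; finding the codecs inside still means parsing that container's own headers, which is what MediaInfo does.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: guess the container format from a file's first bytes.
// Identifies the container only, not the audio/video codecs inside it.
final class ContainerSniffer {

    static String sniff(byte[] head) {
        if (startsWith(head, 0, "FLV")) return "FLV";
        // ISO base media files (MP4, and many MOV files) usually start with an 'ftyp' box.
        if (startsWith(head, 4, "ftyp")) return "MP4/MOV (ISO BMFF)";
        if (startsWith(head, 0, "RIFF") && startsWith(head, 8, "AVI ")) return "AVI";
        if (startsWith(head, 0, ".RMF")) return "RealMedia (RM/RMVB)";
        // Matroska/WebM files begin with the EBML magic 0x1A45DFA3.
        if (head.length >= 4 && (head[0] & 0xFF) == 0x1A && (head[1] & 0xFF) == 0x45
                && (head[2] & 0xFF) == 0xDF && (head[3] & 0xFF) == 0xA3) {
            return "Matroska (MKV/WebM)";
        }
        // MPEG-TS packets are 188 bytes long and each starts with the sync byte 0x47.
        if (head.length > 188 && head[0] == 0x47 && head[188] == 0x47) return "MPEG-TS";
        return "unknown";
    }

    // True if the ASCII string appears in data starting at the given offset.
    private static boolean startsWith(byte[] data, int offset, String ascii) {
        byte[] magic = ascii.getBytes(StandardCharsets.US_ASCII);
        if (data.length < offset + magic.length) return false;
        for (int i = 0; i < magic.length; i++) {
            if (data[offset + i] != magic[i]) return false;
        }
        return true;
    }
}
```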

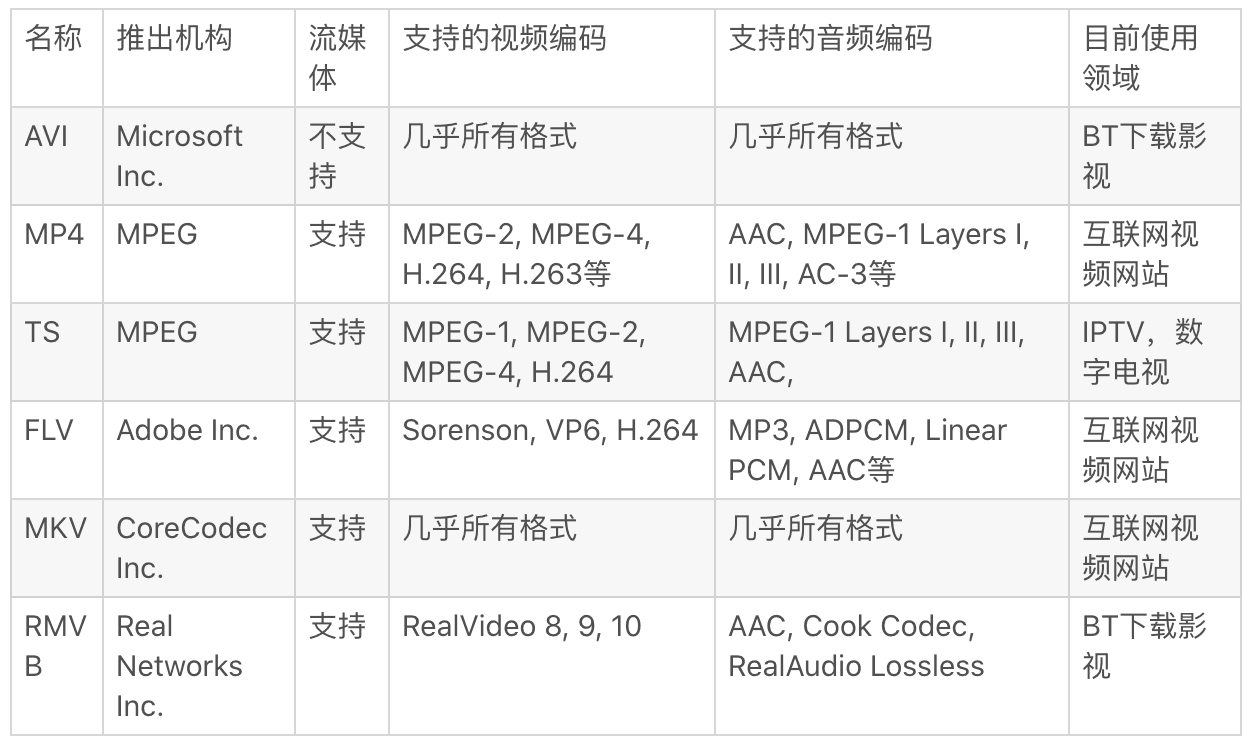

The main container formats are MP4, MKV, FLV, TS, AVI, and RMVB.

Video Player Principles

Audio and video technology mainly covers three areas: container formats, video compression (encoding), and audio compression (encoding). When network transmission is involved, streaming media protocols are a fourth.

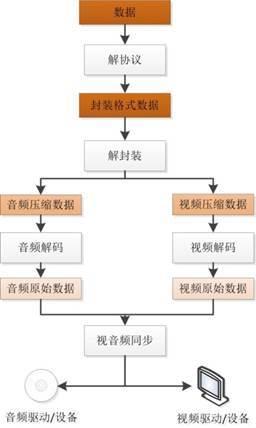

To play a video file from the internet, a video player needs to go through the following steps:

Protocol parsing, container demuxing, audio and video decoding, audio and video synchronization.

When playing a local file, no protocol parsing is needed, so the steps are:

Container demuxing, audio and video decoding, audio and video synchronization.
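The two step lists differ only in the protocol-parsing stage. The sketch below models that ordering; the class, the stage names, and the rule that a URL scheme such as `rtmp://` marks a network source are hypothetical choices for illustration, not a real player's API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical model of the playback stages: each stage is only a label here.
final class PlaybackPipeline {
    enum Stage { PROTOCOL_PARSING, DEMUXING, DECODING, AV_SYNC }

    // Assumption: a source counts as "network" if it uses one of these URL schemes.
    static boolean isNetworkSource(String source) {
        String s = source.toLowerCase(Locale.ROOT);
        return s.startsWith("http://") || s.startsWith("https://")
                || s.startsWith("rtmp://") || s.startsWith("mms://");
    }

    // Returns, in order, the stages a player runs for the given source.
    static List<Stage> stagesFor(String source) {
        List<Stage> stages = new ArrayList<>();
        if (isNetworkSource(source)) {
            stages.add(Stage.PROTOCOL_PARSING); // only network streams carry protocol signaling
        }
        stages.add(Stage.DEMUXING);
        stages.add(Stage.DECODING);
        stages.add(Stage.AV_SYNC);
        return stages;
    }
}
```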

Protocol parsing converts data received over a streaming media protocol into standard container-format data. Audio and video sent over a network usually travel over a streaming protocol such as HTTP, RTMP, HLS, or MMS. Besides the audio and video data, these protocols also carry signaling data, such as playback control (play, pause, stop) or descriptions of the network status. Protocol parsing removes the signaling data and keeps only the audio and video data. For example, parsing data received over RTMP produces FLV-format data.
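For the RTMP-to-FLV case, RTMP message type IDs 8 (audio), 9 (video) and 18 (AMF0 data) use the same numbers as FLV tag types, and their payloads can be copied into FLV tags unchanged. The sketch below assumes RTMP chunks have already been reassembled into complete messages (not shown) and drops everything else, such as type 20 AMF0 commands. The class and method names are hypothetical, and the 9-byte FLV file header written once at the start of the output is also not shown.

```java
import java.io.ByteArrayOutputStream;

// Hypothetical sketch: rewrap a reassembled RTMP message as an FLV tag,
// dropping signaling messages.
final class RtmpToFlv {
    // RTMP message type IDs; FLV uses the same values for its tag types.
    static final int AUDIO = 8;
    static final int VIDEO = 9;
    static final int SCRIPT_DATA = 18; // AMF0 data, e.g. onMetaData

    /** Returns an FLV tag followed by its PreviousTagSize field, or null for signaling messages. */
    static byte[] toFlvTag(int messageTypeId, int timestampMs, byte[] payload) {
        if (messageTypeId != AUDIO && messageTypeId != VIDEO && messageTypeId != SCRIPT_DATA) {
            // e.g. type 20 (AMF0 command such as "play") or protocol control messages.
            return null;
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(messageTypeId);                 // TagType
        writeUInt24(out, payload.length);         // DataSize
        writeUInt24(out, timestampMs & 0xFFFFFF); // Timestamp, lower 24 bits
        out.write((timestampMs >>> 24) & 0xFF);   // TimestampExtended, upper 8 bits
        writeUInt24(out, 0);                      // StreamID, always 0
        out.write(payload, 0, payload.length);    // tag body, copied unchanged
        writeUInt32(out, 11 + payload.length);    // PreviousTagSize = 11-byte header + body
        return out.toByteArray();
    }

    // Big-endian 24-bit write.
    private static void writeUInt24(ByteArrayOutputStream out, int v) {
        out.write((v >>> 16) & 0xFF);
        out.write((v >>> 8) & 0xFF);
        out.write(v & 0xFF);
    }

    // Big-endian 32-bit write.
    private static void writeUInt32(ByteArrayOutputStream out, int v) {
        out.write((v >>> 24) & 0xFF);
        writeUInt24(out, v);
    }
}
```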

Container demuxing splits the input container-format data into a compressed audio stream and a compressed video stream. Common container formats include MP4, MKV, RMVB, TS, FLV, and AVI; each stores compressed video and audio data together according to its own structure. For example, demuxing FLV data yields an H.264-encoded video bitstream and an AAC-encoded audio bitstream.
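A minimal FLV demuxer sketch follows. It assumes a complete FLV file in memory and follows the FLV layout (a file header whose DataOffset is usually 9, then tags, each followed by a 4-byte PreviousTagSize). It inspects only the first byte of each tag body to recognize AAC (SoundFormat 10) and H.264 (CodecID 7). The collected payloads still carry FLV's per-tag codec headers, so turning them into a raw H.264 or AAC stream needs further unwrapping that is not shown. Class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: split an in-memory FLV file into audio and video tag bodies.
final class FlvDemuxer {
    final List<byte[]> audio = new ArrayList<>();
    final List<byte[]> video = new ArrayList<>();

    private static int u8(byte[] b, int i) { return b[i] & 0xFF; }
    private static int u24(byte[] b, int i) { return (u8(b, i) << 16) | (u8(b, i + 1) << 8) | u8(b, i + 2); }
    private static int u32(byte[] b, int i) { return (u8(b, i) << 24) | u24(b, i + 1); }

    void demux(byte[] flv) {
        if (flv.length < 9 || flv[0] != 'F' || flv[1] != 'L' || flv[2] != 'V') {
            throw new IllegalArgumentException("not an FLV file");
        }
        int pos = u32(flv, 5) + 4; // skip the file header (DataOffset) and PreviousTagSize0
        while (pos + 11 <= flv.length) {
            int tagType = u8(flv, pos) & 0x1F; // low 5 bits: 8 audio, 9 video, 18 script data
            int dataSize = u24(flv, pos + 1);
            int dataStart = pos + 11;          // the tag header is 11 bytes
            if (dataStart + dataSize > flv.length) break; // truncated tag
            byte[] body = Arrays.copyOfRange(flv, dataStart, dataStart + dataSize);
            if (tagType == 8) audio.add(body);
            else if (tagType == 9) video.add(body);
            pos = dataStart + dataSize + 4;    // skip this tag's PreviousTagSize
        }
    }

    // Audio tag: high 4 bits of the first byte are SoundFormat (10 = AAC).
    static boolean isAac(byte[] audioBody) { return audioBody.length > 0 && (u8(audioBody, 0) >> 4) == 10; }

    // Video tag: low 4 bits of the first byte are CodecID (7 = AVC/H.264).
    static boolean isH264(byte[] videoBody) { return videoBody.length > 0 && (u8(videoBody, 0) & 0x0F) == 7; }
}
```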

Decoding turns the compressed video/audio bitstreams into uncompressed raw video/audio data. Audio coding standards include AAC, MP3, and AC-3; video coding standards include H.264, MPEG-2, and VC-1. Decoding is the most important and most complex part of the whole system. It turns compressed video into uncompressed color data, such as YUV420P or RGB, and compressed audio into uncompressed audio samples, such as PCM.
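To give a sense of what a decoder outputs, the sketch below computes the size and plane layout of a YUV420P (I420) frame and the size of interleaved PCM audio. It assumes tightly packed planes with no row padding; real decoders often pad rows to a stride, so production code should use the stride the decoder reports. Names are hypothetical.

```java
// Hypothetical sketch: sizes of the raw data produced by decoding.
final class RawFrameMath {
    /** Bytes in one YUV420P frame: a full-resolution Y plane plus quarter-resolution U and V planes. */
    static long yuv420pFrameBytes(int width, int height) {
        long luma = (long) width * height;
        long chroma = (long) ((width + 1) / 2) * ((height + 1) / 2); // size of U, and of V
        return luma + 2 * chroma;
    }

    /** Start offsets of the Y, U and V planes in a tightly packed I420 buffer. */
    static long[] yuv420pPlaneOffsets(int width, int height) {
        long luma = (long) width * height;
        long chroma = (long) ((width + 1) / 2) * ((height + 1) / 2);
        return new long[] {0, luma, luma + chroma};
    }

    /** Bytes of interleaved PCM for a given duration. */
    static long pcmBytes(int sampleRate, int channels, int bytesPerSample, double seconds) {
        return Math.round((double) sampleRate * channels * bytesPerSample * seconds);
    }
}
```

For a 1920×1080 frame this gives 3,110,400 bytes, and one second of 44.1 kHz, 16-bit stereo PCM is 176,400 bytes.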

Audio and video synchronization aligns the decoded video and audio using the timing parameters (such as timestamps) obtained during demuxing, then sends the frames and samples to the system's graphics card and sound card for playback.
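The text does not specify a synchronization strategy. One common approach is to treat the audio playback position as the master clock and adjust video to it. The sketch below compares each video frame's presentation timestamp (PTS) with the audio clock and decides whether to render, wait, or drop the frame. The 40 ms threshold and all names are assumptions for illustration.

```java
// Hypothetical sketch of audio-master A/V sync: video follows the audio clock.
final class AvSync {
    enum Action { RENDER, WAIT, DROP }

    // Assumed tolerance; real players tune this, often relative to the frame duration.
    static final long SYNC_THRESHOLD_MS = 40;

    static Action decide(long videoPtsMs, long audioClockMs) {
        long diff = videoPtsMs - audioClockMs;
        if (diff > SYNC_THRESHOLD_MS) return Action.WAIT;  // video is ahead: hold the frame
        if (diff < -SYNC_THRESHOLD_MS) return Action.DROP; // video is late: skip it to catch up
        return Action.RENDER;                              // close enough: show it now
    }

    // How long to hold a frame that is ahead of the audio clock.
    static long waitMs(long videoPtsMs, long audioClockMs) {
        return Math.max(0, videoPtsMs - audioClockMs);
    }
}
```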

Video Encoding

The main purpose of video encoding is to compress video pixel data (RGB, YUV, etc.) into a video bitstream, reducing the amount of video data. Uncompressed video is very large: a single movie can take up hundreds of gigabytes. Because the video bitstream makes up the vast majority of the total audio and video data, video encoding is one of the most important technologies in the field; a more efficient encoder delivers higher quality at the same bitrate.
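A quick calculation supports the "hundreds of gigabytes" figure. The sketch assumes a 2-hour movie at 1920×1080, 30 fps, stored as raw YUV420P (1.5 bytes per pixel), and compares it with the same duration at an assumed 5 Mbps encoded bitrate; both the bitrate and the class name are illustrative.

```java
// Hypothetical worked example: raw versus encoded video size.
final class RawVideoSize {
    /** Bytes of raw YUV420P video (1.5 bytes per pixel). */
    static long rawBytes(int width, int height, double fps, double seconds) {
        long frameBytes = (long) width * height * 3 / 2;
        return Math.round(frameBytes * fps * seconds);
    }

    /** Bytes of an encoded stream at a constant bitrate (bits per second). */
    static long encodedBytes(long bitsPerSecond, double seconds) {
        return Math.round(bitsPerSecond / 8.0 * seconds);
    }
}
```

With these inputs, `rawBytes(1920, 1080, 30, 7200)` is 671,846,400,000 bytes (about 672 GB), while `encodedBytes(5_000_000, 7200)` is 4.5 GB, roughly 149 times smaller.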

The main video coding standards are HEVC (H.265), H.264, MPEG-4, MPEG-2, VP9, VP8, etc.

The current mainstream standard is H.264.

H.264 is a coding standard, not a specific encoder. The standard defines the bitstream syntax and the decoding process; encoder implementations (x264, for example) are free to choose their own algorithms as long as they produce a conforming bitstream.

Support for H.265 is also growing.

Audio Encoding

The main purpose of audio encoding is to compress audio sample data (PCM, etc.) into an audio bitstream, reducing the amount of audio data. Audio encoding is also an important technology for internet audio and video. However, audio data is generally much smaller than video data, so even a somewhat outdated audio coding standard that produces larger audio has little effect on the total size.
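The size gap is easy to quantify. The sketch below computes the raw bitrate of CD-quality PCM and of raw 1080p30 YUV420P video, plus audio's share of the total after encoding; the 128 kbps AAC and 5,000 kbps H.264 figures in the usage note are assumed typical values, and the names are hypothetical.

```java
// Hypothetical sketch: compare audio and video data rates in kbps (1 kbps = 1000 bit/s).
final class AudioVsVideo {
    /** Bitrate of uncompressed PCM audio. */
    static double pcmKbps(int sampleRate, int channels, int bitsPerSample) {
        return (double) sampleRate * channels * bitsPerSample / 1000.0;
    }

    /** Bitrate of uncompressed YUV420P video (12 bits per pixel). */
    static double rawYuv420pKbps(int width, int height, double fps) {
        return (double) width * height * 12 * fps / 1000.0;
    }

    /** Fraction of the total bitrate taken by audio. */
    static double audioShare(double audioKbps, double videoKbps) {
        return audioKbps / (audioKbps + videoKbps);
    }
}
```

Raw 44.1 kHz, 16-bit stereo PCM is 1,411.2 kbps, against 746,496 kbps for raw 1080p30 video. After encoding, `audioShare(128, 5000)` is about 0.025, so audio is roughly 2.5% of the total.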

The main audio coding standards are AAC, MP3, WMA, etc.

The media codecs, container formats, and network protocols supported by the Android platform are listed here:

https://developer.android.com/guide/topics/media/media-formats

For example, on an Android device, AudioRecord captures PCM audio data, which is encoded into AAC; the AAC data is later decoded back into PCM and played through AudioTrack.
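When PCM from AudioRecord is fed into an AAC encoder (for example via MediaCodec's `queueInputBuffer(index, offset, size, presentationTimeUs, flags)`), each chunk needs a presentation timestamp in microseconds. One common approach, sketched below, derives the timestamp from the number of samples consumed rather than from the wall clock, so timestamps stay evenly spaced. The class and method names are hypothetical; only the 1024-samples-per-frame size of AAC-LC is a property of the format.

```java
// Hypothetical sketch: timestamps for PCM chunks queued into an AAC encoder.
final class PcmChunker {
    // One AAC-LC frame encodes 1024 samples per channel.
    static final int AAC_LC_FRAME_SAMPLES = 1024;

    /** Interleaved PCM bytes covered by one AAC-LC frame (16-bit audio = 2 bytes per sample). */
    static int bytesPerAacFrame(int channels, int bytesPerSample) {
        return AAC_LC_FRAME_SAMPLES * channels * bytesPerSample;
    }

    /** Samples per channel contained in a chunk of interleaved PCM bytes. */
    static long samplesPerChannel(int pcmBytes, int channels, int bytesPerSample) {
        return pcmBytes / (channels * bytesPerSample);
    }

    /** Presentation time, in microseconds, of the sample that follows samplesSoFar samples. */
    static long presentationTimeUs(long samplesSoFar, int sampleRate) {
        return samplesSoFar * 1_000_000L / sampleRate;
    }
}
```

In a capture loop, you would compute `presentationTimeUs(total, sampleRate)` for each chunk read from AudioRecord, queue the chunk with that value, then add `samplesPerChannel(bytesRead, channels, 2)` to `total`. At 44.1 kHz, one AAC-LC frame lasts 1024 / 44100 s, about 23.2 ms.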

Video Transcoding/Compression

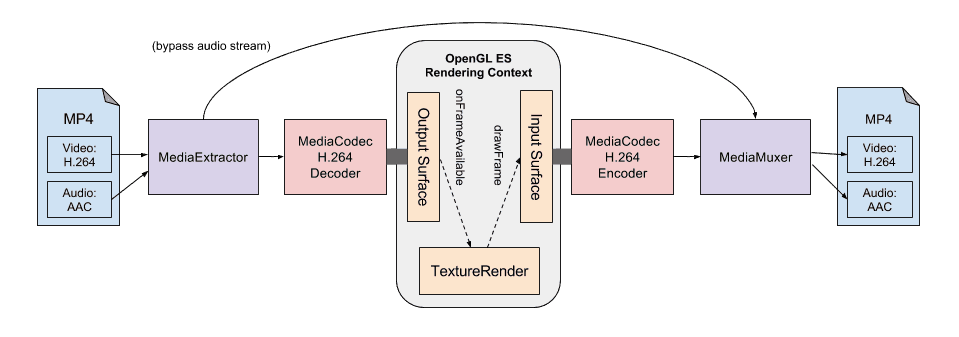

Video transcoding can be understood as decoding a video and then re-encoding it in another format or with different parameters.

Compressing a video is really a form of transcoding: the video is re-encoded to obtain a smaller file whose quality is still acceptable.

Basic principles of audio and video compression: https://blog.csdn.net/leixiaohua1020/article/details/28114081

So what factors affect the size of a video?

The determining factor is the bitrate (commonly measured in kbps), that is, how much data is used per second. For a given duration, the higher the bitrate, the larger the file. Whether the video is 1080p, 720p, or any other resolution, videos with the same bitrate and duration have the same file size; only the image quality differs.

There are many factors that affect the bitrate:

- With other conditions unchanged, the larger the frame size (1080p, 720p, 480p, etc.), the higher the bitrate.

- With other conditions unchanged, the higher the frame rate (25 fps, 30 fps, 60 fps, etc.), the higher the bitrate.

- The encoding algorithm also matters. A better algorithm achieves higher quality at the same bitrate, or the same quality at a lower bitrate, which reduces the size of the video file.
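A rough way to see the first two factors together is the bits-per-pixel (bpp) figure: bitrate divided by pixels per second. The sketch assumes bpp stays constant, which is only an approximation, since encoders do not scale linearly and a better algorithm needs fewer bits per pixel for the same quality. The 0.1 bpp in the usage note is an arbitrary illustrative value, and the names are hypothetical.

```java
// Hypothetical rough model: bits per pixel (bpp) = bitrate / (width * height * fps).
// Holding bpp fixed shows how bitrate grows with resolution and frame rate.
final class BitrateEstimate {
    static double bitsPerPixel(double kbps, int width, int height, double fps) {
        return kbps * 1000 / ((double) width * height * fps);
    }

    static double kbpsForBpp(double bpp, int width, int height, double fps) {
        return bpp * width * height * fps / 1000;
    }
}
```

At 0.1 bpp this gives about 2,765 kbps for 720p30, 6,221 kbps for 1080p30 (2.25 times as many pixels), and 12,442 kbps for 1080p60 (twice the frame rate).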

A simple formula for estimating video file size:

Bitrate (kbps) × Video duration (s) ÷ 8 = Video file size (KB)

Here the bitrate is the total of the video and audio bitrates, and container overhead is ignored.
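The formula and its inverse, which is what a compressor needs when aiming for a target size, fit in a few lines. In this sketch 1 KB means 1000 bytes, matching 1 kbps = 1000 bit/s; the class name and the audio-budget helper are hypothetical.

```java
// Hypothetical sketch of the size formula: KB = kbps * seconds / 8.
final class VideoSizeCalc {
    /** File size in kilobytes from total bitrate (kbps) and duration (s). */
    static double fileSizeKB(double bitrateKbps, double seconds) {
        return bitrateKbps * seconds / 8;
    }

    /** Total bitrate (kbps) needed to hit a target size in KB. */
    static double bitrateForSizeKbps(double targetKB, double seconds) {
        return targetKB * 8 / seconds;
    }

    /** Video bitrate left after reserving the audio bitrate, for a target size. */
    static double videoBitrateForSizeKbps(double targetKB, double seconds, double audioKbps) {
        return bitrateForSizeKbps(targetKB, seconds) - audioKbps;
    }
}
```

For example, a 60-second clip at 1000 kbps is `fileSizeKB(1000, 60)` = 7,500 KB. To fit a 60-second clip into 10,000 KB with 128 kbps audio, the video bitrate should be about 1,205 kbps.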

The library we use for video compression in our project: https://github.com/ypresto/android-transcoder

Android MediaCodec: https://developer.android.com/reference/android/media/MediaCodec

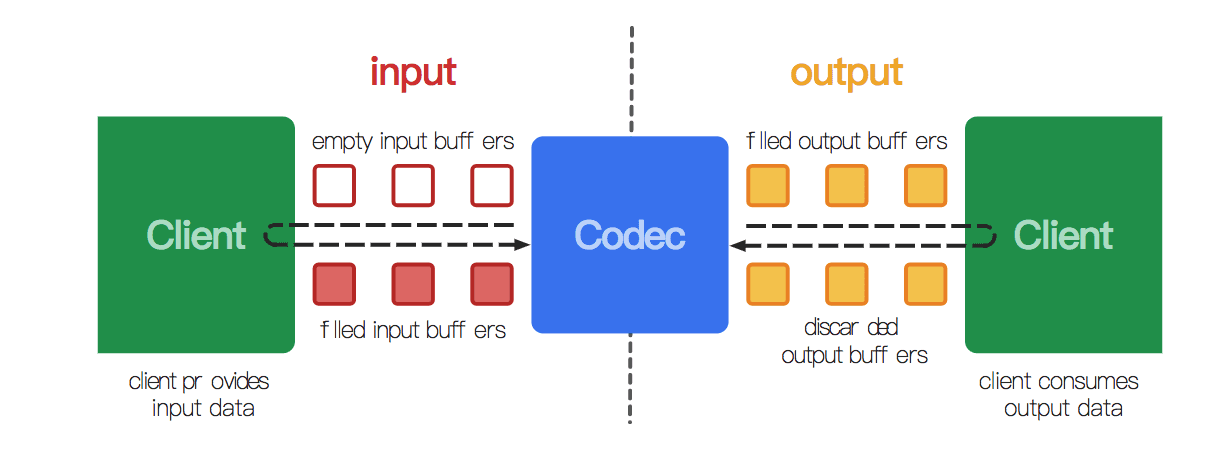

MediaCodec is a set of low-level audio and video encoding and decoding APIs that Google added in API level 16. It can use hardware acceleration directly. To encode video, you configure MediaCodec as a video encoder, keep feeding it raw YUV data, and read the encoded H.264 stream from its output. In terms of API design, it has two queues, one for input and one for output:

When MediaCodec acts as an encoder, the input queue holds raw YUV data and the output queue yields the encoded H.264 stream. When it acts as a decoder, the roles are reversed.
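To make the two-queue design concrete without an Android device, the sketch below simulates it in plain Java. It is not the MediaCodec API: the class, its methods, and the stand-in "codec" function are hypothetical, and the real MediaCodec processes buffers asynchronously. The comments note which real MediaCodec calls each step roughly corresponds to.

```java
import java.util.ArrayDeque;
import java.util.function.UnaryOperator;

// Hypothetical plain-Java simulation of MediaCodec's input/output queue design.
final class TwoQueueCodec {
    private final ArrayDeque<byte[]> inputQueue = new ArrayDeque<>();
    private final ArrayDeque<byte[]> outputQueue = new ArrayDeque<>();
    private final UnaryOperator<byte[]> codec; // stands in for the hardware encoder/decoder

    TwoQueueCodec(UnaryOperator<byte[]> codec) {
        this.codec = codec;
    }

    // Roughly dequeueInputBuffer + queueInputBuffer: submit raw data (e.g. a YUV frame).
    void queueInput(byte[] data) {
        inputQueue.add(data);
    }

    // The real codec works on its own; here the input queue is drained explicitly.
    void process() {
        byte[] next;
        while ((next = inputQueue.poll()) != null) {
            outputQueue.add(codec.apply(next));
        }
    }

    // Roughly dequeueOutputBuffer + releaseOutputBuffer: take processed data, or null if none is ready.
    byte[] dequeueOutput() {
        return outputQueue.poll();
    }
}
```

On a device, an encoder loop instead calls `dequeueInputBuffer(timeoutUs)`, `getInputBuffer(index)` and `queueInputBuffer(index, offset, size, presentationTimeUs, flags)` to feed YUV frames, then `dequeueOutputBuffer(bufferInfo, timeoutUs)`, `getOutputBuffer(index)` and `releaseOutputBuffer(index, false)` to collect the H.264 output.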

Reference: https://me.csdn.net/leixiaohua1020